MUNI Transit Analytics and Visualization Web App

Overview

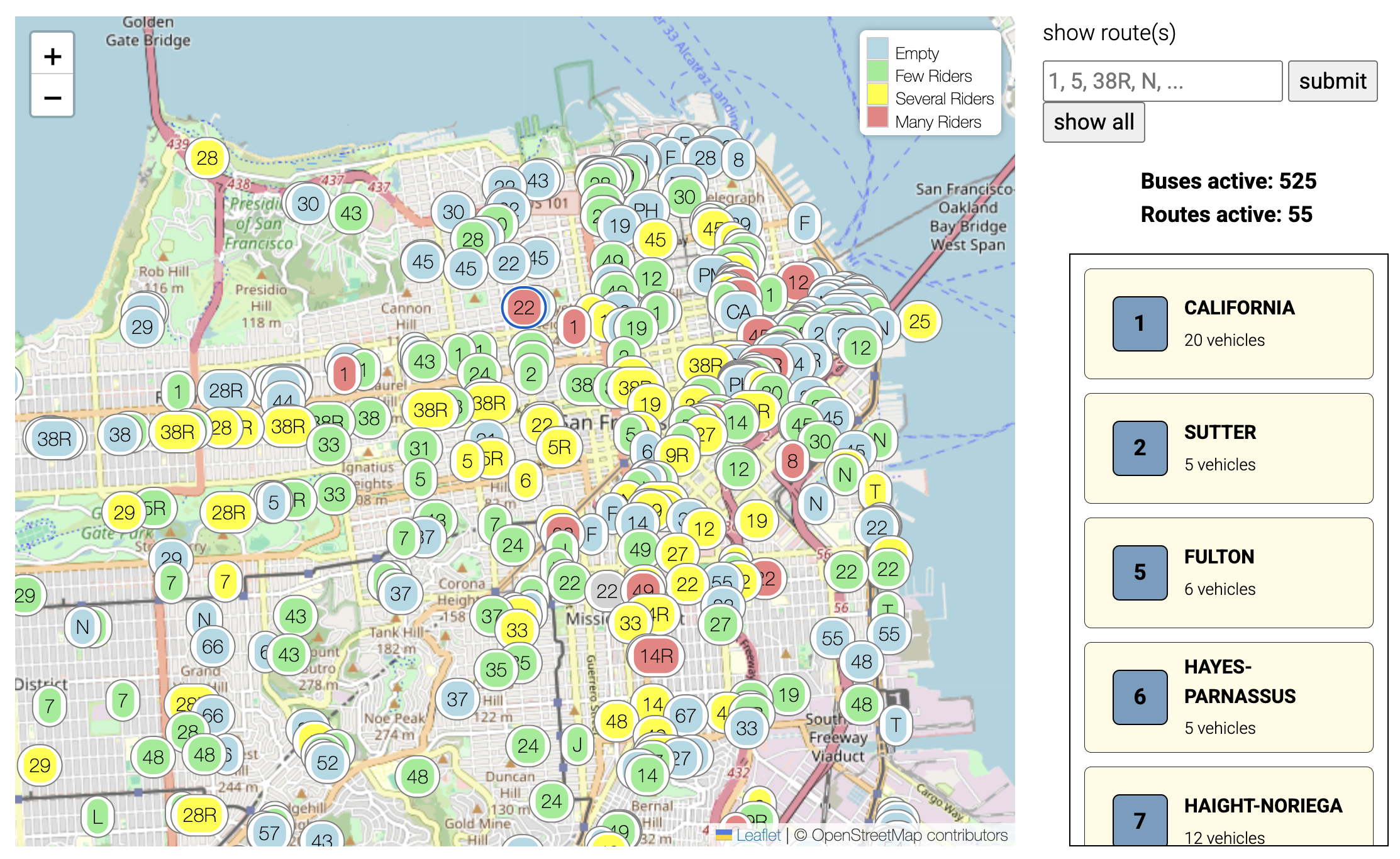

San Francisco’s Municipal Transportation Agency (SFMTA) publishes real-time data on its vehicles through a GTFS-Realtime API. I love public transportation and want to see it used to its full potential. To that end, this project builds a real-time visualization and analytics platform that presents SFMTA data more transparently and accessibly than the city’s own web apps or third-party map providers do.

Project Table of Contents